How to 10x your A/B testing velocity without devs (Experiments you can run now)

A/B testing sounds simple — until you actually try to run one.

You want to test a push headline, tweak an in-app message CTA, or see whether timing or channel impacts conversion. But instead of launching an experiment, you’re filing a ticket, waiting for a sprint, and negotiating priorities with engineering. By the time the test goes live, the insight is already late.

Growth belongs to teams that learn fastest. When every messaging experiment depends on a dev sprint, experiment velocity drops — and so does a PMM’s ability to drive measurable impact.

In this blog post, we’ll show how marketers can take ownership of communication experiments without touching the app’s codebase.

The hidden cost of dev-dependent testing

When communication testing depends on dev cycles, PMMs don’t just lose time — they lose revenue, learning speed, and momentum. Experiments get delayed, insights arrive late, and optimization quietly stalls.

To make this gap tangible, here’s how dev-dependent testing compares to self-service testing in practice:

| Test type | With a dev dependency | Autonomous testing |
|---|---|---|
| Change push notification copy | 2-3 weeks | 15 minutes |
| Test 3 different CTAs | 2-3 weeks + 3 separate deploys | 15 minutes (one setup) |
| Experiment with send timing | Requires code changes | Automatic scheduling |
| Compare push vs. in-app vs. email for better conversion | Multiple sprint cycles | Single journey with A/B split |

Looks like it's worth a shot, right?

Removing the dev dependency from communication testing is no longer a nice-to-have. It’s foundational for modern, high-velocity marketing.

Let’s look at how teams move from this model to autonomous communication testing, and what that unlocks in practice.

Solution: A/B/n testing inside journeys (not code)

The breakthrough isn’t just making testing faster; it’s removing the dependency entirely.

Modern customer engagement platforms separate communication logic from your app’s codebase. Instead of hardcoding messages into your app, you build them in an external system that your app queries at runtime. This architectural shift means PMMs can create, test, and optimize messaging without ever needing engineering resources.

How journey-based testing changes the game

Traditional A/B testing treats each message as an isolated experiment. You test Push A versus Push B, pick a winner, move on.

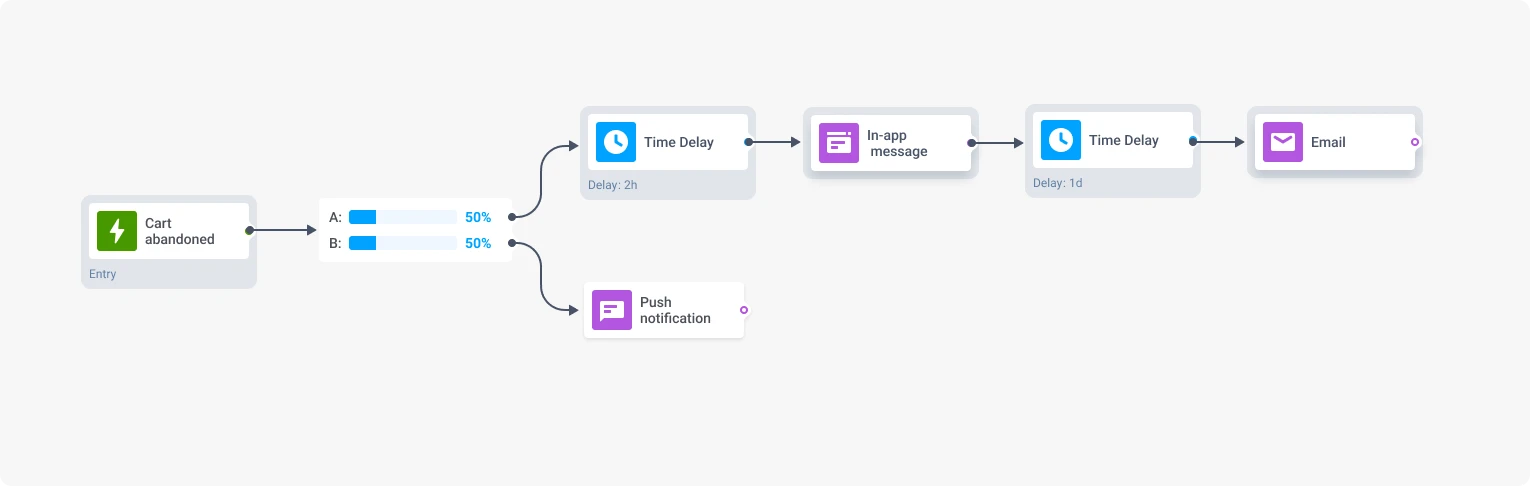

In Customer Journey Builder, you can use an A/B/n split to test entire communication strategies.

Instead of testing: “Which push notification headline works better?”

You can test: “Should high-value users who abandon cart get an immediate push with urgency framing, or should they get an in-app message after 2 hours with a discount code, followed by an email if they don’t convert within 24 hours?”

That’s not one variable — that’s channel, timing, copy, and sequence logic all tested together to find the optimal path to conversion.

The platform handles:

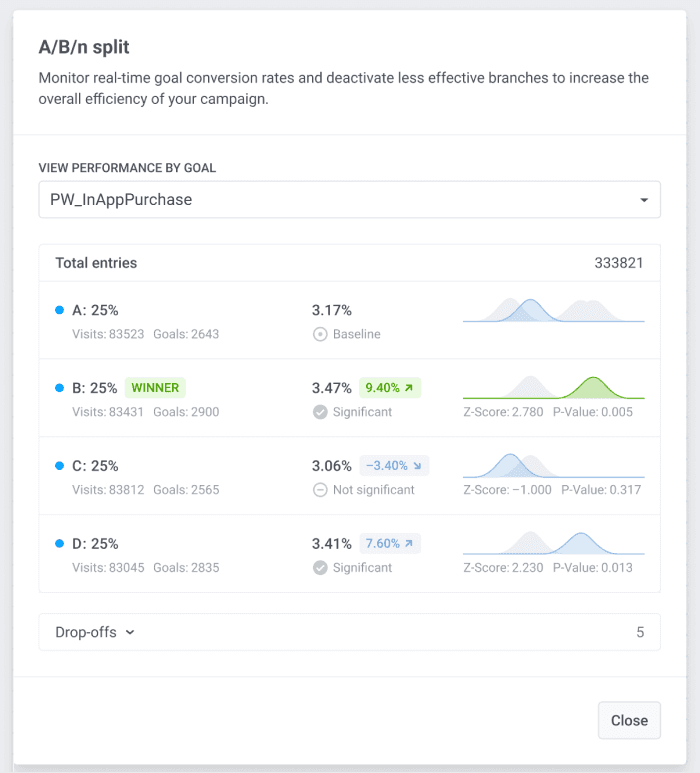

- Random traffic distribution (so each variant gets a comparable, unbiased sample of users)

- Real-time performance tracking (engagement rates, goal completion, statistical significance)

- Automatic winner detection (when a variant reaches the confidence threshold)

- Seamless scaling (turn off losers, scale winners to 100% with one click)
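A common way to implement random traffic distribution is deterministic bucketing rather than a coin flip at send time: hash a stable user ID together with an experiment key, map the hash to a number in [0, 1), and pick the variant whose share of traffic covers that number. The sketch below illustrates that general technique only; `assign_variant`, the experiment ID, and the weight format are hypothetical and are not Pushwoosh's API or internal implementation.

```python
from __future__ import annotations

import hashlib


def assign_variant(user_id: str, experiment_id: str, weights: dict[str, float]) -> str:
    """Deterministically map a user to a variant in proportion to traffic weights.

    Hashing "experiment_id:user_id" gives every user a stable pseudo-random
    bucket, so a user who re-enters the journey lands in the same variant,
    while a different experiment ID reshuffles the audience.
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")

    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).digest()
    # Keep 53 bits of the hash so the division yields an exact float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53

    # Walk the cumulative traffic shares until one covers the bucket.
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if bucket < cumulative:
            return variant
    # Floating-point rounding can leave the final boundary just below 1.0;
    # fall back to the last variant that actually receives traffic.
    return [v for v, w in weights.items() if w > 0][-1]
```

In this sketch, "scale the winner to 100%" amounts to changing the weights to something like `{"A": 0, "B": 1, "C": 0}`. Note that re-weighting while a test is still running moves some users between variants, which is one reason to finish a test before rebalancing traffic.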

Every hypothesis can be validated before you commit and scale.

What you can (and should) test without dev work

Here’s the full scope of what becomes testable when you’re no longer dependent on engineering:

Message content and creative:

- Headlines, body copy, CTAs

- Tone and voice (urgent vs. conversational, benefit vs. feature-focused)

- Personalization depth (generic vs. name-based vs. behavior-based)

- Value proposition framing (discount vs. exclusivity vs. FOMO)

- Rich media (image vs. no image, GIF vs. static, video vs. text)

Channel and format:

- Push notification vs. in-app message vs. email vs. SMS

- Single channel vs. multi-channel sequences

- Deep link destinations (where users land after clicking)

Timing and triggers:

- Immediate vs. delayed delivery

- Optimal send times (morning vs. evening, weekday vs. weekend)

- Trigger events (cart abandonment vs. browsing behavior vs. time-since-last-open)

- Sequence cadence (message 2 after 2 hours vs. 24 hours vs. 3 days)

Results tied to real conversions

Here’s where journey-based testing gets serious: you don’t measure success by open rates or click rates. You measure it by business outcomes.

When you set up an A/B/n test in Customer Journey Builder, you define goal events tied to revenue:

- Purchase_Completed

- Subscription_Started

- Premium_Upgrade

- Cart_Checkout

- Trial_Extended

The platform tracks which variant drives the most goal completions, calculates lift percentages, and provides statistical confidence in real time.
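The post doesn't specify which statistical method the platform uses. As one common way to turn raw goal completions into a lift percentage and a confidence figure, the sketch below runs a pooled two-proportion z-test comparing a variant against the control. The function name and the example counts are hypothetical.

```python
from __future__ import annotations

import math


def lift_and_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Relative lift of variant B over control A, plus a two-sided p-value.

    Uses a pooled two-proportion z-test on goal-completion rates
    (e.g. Purchase_Completed divided by users who entered each branch).
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("each variant needs at least one user")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    if p_a == 0:
        raise ValueError("lift is undefined when the control rate is zero")
    lift = (p_b - p_a) / p_a

    # Standard error under the null hypothesis that both rates are equal.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variance: every user converted")

    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return lift, p_value
```

For hypothetical counts of 200 conversions out of 5,000 control users and 250 out of 5,000 in variant B, this reports a 25% lift with a two-sided p-value of roughly 0.016, which clears a 95% confidence threshold. Two caveats apply to a fixed-sample test like this one: re-checking it continuously and stopping at the first significant reading inflates false positives unless the method is built for sequential monitoring, and comparing several variants against one control (A/B/n) calls for a multiple-comparison correction or a stricter threshold.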

That’s autonomous testing. That’s how modern PMMs work.

Real no-code experiments you can run right now

Theory is nice. Specifics are better.

If you’re a PMM or a mobile marketer with access to a customer engagement platform like Pushwoosh, here are three experiments you can build and launch this week, no engineering required.

Each includes: the business challenge, what to test, how to structure it in Customer Journey Builder, and what you’ll learn.

Experiment 1: Cart abandonment recovery (E-commerce/retail)

The challenge: 60-80% of users who add items to cart never complete checkout. That’s massive revenue leakage. You need to recover those conversions, but you don’t know whether urgency, discounts, or reassurance works best for your audience.

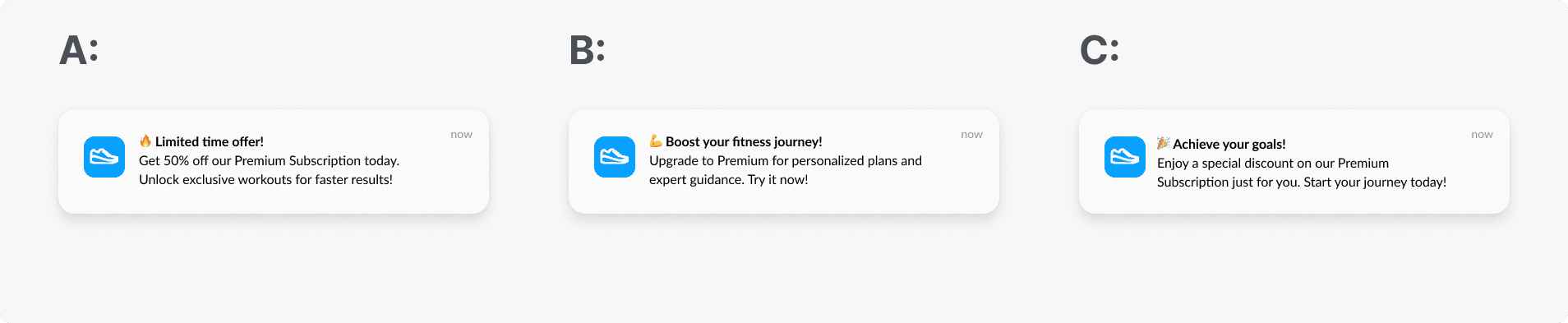

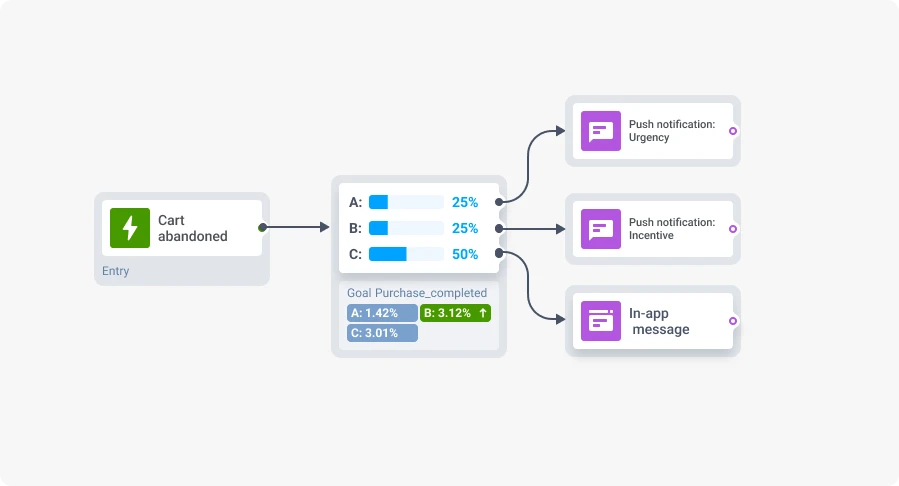

What you’re testing: Three different messaging strategies to bring users back to complete their purchase:

- Variant A: Urgency-based push (“Your cart expires in 3 hours!”)

- Variant B: Incentive-based push (“Complete your order and save 10%”)

- Variant C: In-app message with trust signals (“Free returns/Secure checkout/50k 5-star reviews”)

Entry trigger: Cart_Abandoned (or Added_to_Cart + user didn’t trigger Purchase_Completed within 1 hour)

Goal event: Purchase_Completed
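The fallback entry trigger (Added_to_Cart with no Purchase_Completed within 1 hour) is plain time-window logic. A minimal sketch, assuming each user's events are available as name/timestamp pairs; the `Event` type and `qualifies_for_cart_recovery` function are hypothetical illustrations, since Customer Journey Builder configures this condition visually.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    name: str
    at: datetime


def qualifies_for_cart_recovery(events: list[Event], now: datetime,
                                wait: timedelta = timedelta(hours=1)) -> bool:
    """True if the latest Added_to_Cart is at least `wait` old and
    no Purchase_Completed happened at or after it."""
    cart_adds = [e.at for e in events if e.name == "Added_to_Cart"]
    if not cart_adds:
        return False
    last_add = max(cart_adds)
    if now - last_add < wait:
        return False  # still inside the grace window; the user may yet check out
    # Purchases before the latest cart add belong to an earlier order and don't count.
    purchased_after = any(e.name == "Purchase_Completed" and e.at >= last_add for e in events)
    return not purchased_after
```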

What you’ll learn:

- Which psychological trigger (urgency vs. incentive vs. trust) resonates with your users

- Whether push notifications or in-app messages are more effective for conversion

- What messaging to scale across other revenue-critical journeys

Time to build: 30 minutes

Time to results: 5-7 days

No dev work required

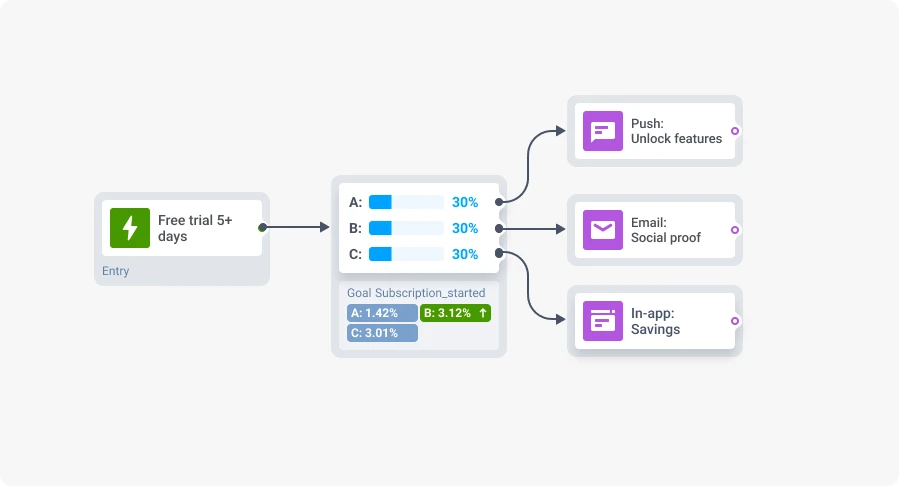

Experiment 2: Free trial to paid conversion (SaaS/fintech/news & media)

The challenge: Users sign up for free trials but don’t convert to paid subscriptions. You’re leaving subscription revenue on the table. You need to test different value propositions to see what drives upgrades.

What you’re testing: Three different approaches to converting trial users to premium subscribers:

- Variant A: Feature unlocks (“Upgrade to access all premium features”)

- Variant B: Social proof (“Join 100,000 premium members”)

- Variant C: Savings framing (“Save $60/year with our annual plan”)

Plus: Channel comparison (push vs. email vs. in-app)
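Adding a channel comparison multiplies the number of arms. If the three value propositions are crossed with three channels, the test has nine arms, and each one needs enough users to detect a difference. A rough per-arm sample size can be estimated with the standard normal-approximation formula for comparing two proportions. The function below is a hypothetical sketch, and the conversion rates in the note after it are illustrative assumptions, not benchmarks.

```python
import math
from statistics import NormalDist


def users_per_arm(p_control: float, p_variant: float,
                  alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in each arm to detect p_control -> p_variant
    with a two-sided two-proportion test (normal approximation, unpooled variance)."""
    if p_control == p_variant:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_variant - p_control) ** 2)
```

With an assumed 4% baseline, detecting a lift to 5% at 95% confidence and 80% power needs roughly 6,700 users per arm, so nine arms need about 61,000 trial users reaching the trigger, versus about 20,000 for three arms. Dividing the total by how many users hit the trigger each day gives a rough time to results. This estimate does not correct for multiple comparisons.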

Entry trigger: User reaches Day 5 of a 7-day free trial (or a custom milestone like "User completed 3rd workout" for fitness apps)

Goal event: Subscription_Started or Payment_Completed

What you’ll learn:

- Whether your users respond better to features, social proof, or economic incentives

- Which channel is most effective for driving subscription decisions (not just engagement)

Time to build: 45 minutes (including creating content for each channel)

Time to results: 10-14 days

No dev work required

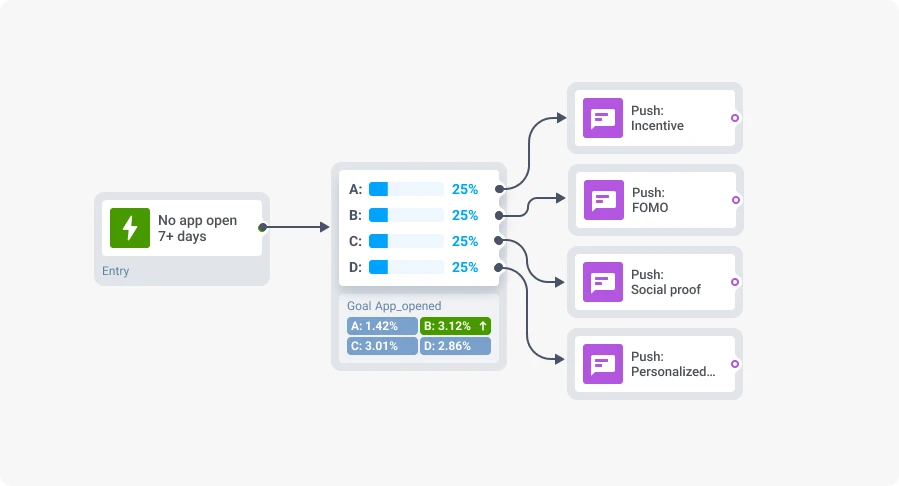

Experiment 3: Re-engagement for dormant users (Mobile games/media/social apps)

The challenge: Users who haven’t opened your app in 7 or more days are at high risk of churning for good. You need to bring them back, but generic “we miss you” messages don’t work. You want to test personalized incentives vs. FOMO vs. content-based hooks.

What you’re testing: Four different re-engagement strategies based on user behavior and value:

- Variant A: Incentive-based (bonus credits/currency)

- Variant B: FOMO-based (new content/features they’re missing)

- Variant C: Social proof (friends/community activity)

- Variant D: Personalized content (based on their past behavior)

Entry trigger: User has not triggered App_Opened in the last 7 days

Goal event: App_Opened (within 48 hours of the message)
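The entry condition and the goal window can be sketched as two small checks. Assumptions: each user's last App_Opened timestamp is available, users with no recorded opens count as dormant, and the 48-hour window is measured from the send time. Both function names are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def is_dormant(last_app_open: datetime | None, now: datetime,
               threshold: timedelta = timedelta(days=7)) -> bool:
    """Entry condition: no App_Opened event within `threshold` of `now`.

    Assumption: users with no recorded App_Opened at all are treated as dormant.
    """
    return last_app_open is None or now - last_app_open >= threshold


def reached_goal(message_sent_at: datetime, app_opens: list[datetime],
                 window: timedelta = timedelta(hours=48)) -> bool:
    """Goal check: any App_Opened in [message_sent_at, message_sent_at + window]."""
    deadline = message_sent_at + window
    return any(message_sent_at <= opened <= deadline for opened in app_opens)
```

The window keeps users who drift back on their own weeks later from being credited to a message. Because some dormant users return without any prompt, a no-message holdout branch is a useful baseline for measuring each variant's incremental effect.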

What you’ll learn:

- How to differentiate re-engagement strategy by user value (don’t treat everyone the same)

- Which psychological triggers work best for bringing back dormant users

Time to build: 30 minutes

Time to results: 5-7 days

No dev work required

Why these experiments work

Each of these journeys tests real marketing hypotheses that directly impact business metrics:

- Cart abandonment → Revenue recovery

- Trial conversion → Subscription growth

- Re-engagement → Retention and LTV

And each one is:

- Buildable in under an hour using visual journey tools

- Measurable with real conversion events, not vanity metrics

- Scalable immediately once you identify the winner

- Completely autonomous — no engineering, no sprints, no dependencies

This is what experiment velocity looks like when testing infrastructure matches the pace of marketing.

Start autonomous testing with Pushwoosh

While you’re waiting two weeks for a single test to clear the dev backlog, your competitors are running twenty.

Pushwoosh lets mobile teams run autonomous A/B/n tests inside real customer journeys, covering channels (push notifications, in-app messages, email), timing, and CTAs, without touching the app’s codebase.

If you’re done waiting on backlogs and ready to increase your experiment velocity, it’s time to see what self-service testing looks like in practice.
